After completing her PhD, Anat Lubetzky, an assistant professor in NYU Steinhardt’s Department of Physical Therapy, saw an opportunity to expand upon traditional approaches to researching balance. “Typically, this research is done comparing participants’ balance with eyes open to when their eyes are closed,” she said. “We had a different way of looking at how people use visual information for balance: comparing various visual stimuli with participants’ eyes open.”

To investigate the impact of different visual environments on a patient’s balance, Lubetzky and her team developed an application for portable virtual reality (VR) headsets designed for individuals with vestibular disorders. We sat down with Lubetzky to discuss this technology and the benefits of incorporating VR into physical therapy.

What do people see when they put on your virtual reality headset?

A still image of an environment shown to patients through Lubetzky’s VR headset application.

First of all, “virtual reality” is such a big term. There is so much diversity in what you can do with it. When you put our app on, you could see, for example, mildly moving stars that most healthy adults don’t even notice are moving. The scientific premise of our paradigm is that when a person observes mildly moving shapes, they tend to match their body sway to the movement. Eventually, this will stop when the speed increases, whereas people with sensory loss might not be able to stop moving with the shapes.

From there, we added a layer of sound. What happens to balance when there are high and low levels of sound and visuals? I’ve recently become interested in the role of hearing loss in balance and how what we hear affects our balance — this is something I’ll be exploring with a recent grant from the Hearing Health Foundation.

You also just received an NIH REACT grant to study “Vestibular Rehabilitation Utilizing Virtual Environments to Train Sensory Integration for Postural Control in a Functional Context.” What inspired you to conduct research that involves VR?

Clinicians using the VR application can choose to display different visual environments, like the virtual city street depicted above.

The number one reason was easier clinical translation. In the past few years, there have been substantial advancements in VR headsets in terms of their simplicity, portability, and affordability. If these tools were to be at every clinic one day, what would be the best way for clinicians to use them?

Our study will compare standard vestibular rehabilitation with treatment using our VR application. This app offers more diverse visual environments (e.g., a street or train station), and the clinician can choose the intensity of sensory load within each context.

Sometimes with our VR app, patients with vestibular disorders report improvement on the spot — but that’s too fast to reflect a lasting sensory integration change. What I want to find out is: if the app is used consistently, are we really training people to change their sensory integration strategies in the long term?

Who are you working with on this project?

I am fortunate to have a lot of amazing collaborators. I come from a PhD in Rehabilitation Sciences, where the philosophy was always that while you could spend thousands of hours trying to become an expert in another field (for example, engineering), it’s better to say, “I’m a physical therapist and I know balance. How about I find an engineer to collaborate with instead?”

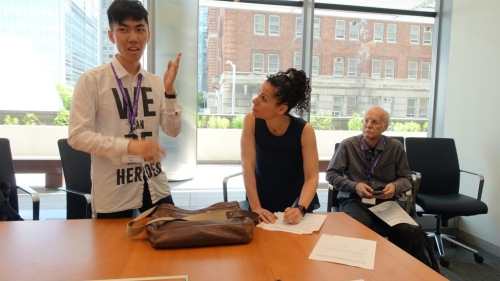

I had never worked with people with vestibular disorders or hearing loss before. That’s why I partnered with my colleagues at Mount Sinai’s Eye and Ear Institute who are experts in vestibular rehabilitation. My team is also made up of people from music technology and computer science who have helped create and code the headset app — we’re a team of seven investigators and more students from every discipline. Each one of us gives our strengths.

Lubetzky takes notes about a participant’s experience with the VR headset’s 3-D sound, which was designed by students in NYU Steinhardt’s music technology program.

What is something you’ve learned from working with such an interdisciplinary team?

Even terms that you think you understand, people use in different ways! Just recently, I completed writing a systematic review of the contribution of auditory cues to balance with a music technology PhD student, a neurotologist, and a vestibular therapist. It took us weeks to understand a simple word like “frequency,” because it meant something different to each one of us. That was an amazing interdisciplinary experience.

What is the most rewarding part of working with students across NYU?

Being a physical therapist is all about helping people. So being able to harness students’ brightness and skills to help people is something that means a lot to me.

Lubetzky’s collaborators include: Ken Perlin, professor of computer science at the Courant Institute of Mathematical Sciences; Bryan Hujsak, Jennifer Kelly, and Maura Cosetti of Mount Sinai’s New York Eye and Ear Institute; Daphna Harel, an associate professor of applied statistics at NYU Steinhardt; and Agnieszka Roginska, associate professor and associate director of NYU Steinhardt’s Music Technology program.