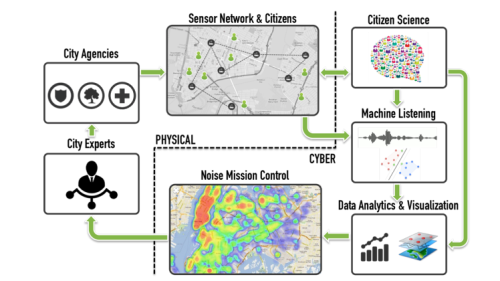

The SONYC Cyber-Physical System

The Sounds of New York City (SONYC)—a first-of-its-kind research project addressing urban noise pollution—has launched a citizen science initiative to help artificial intelligence (AI) technology understand exactly which sounds are contributing to unhealthy levels of noise in New York City. Citizen scientists are members of the public who collaborate with researchers by collecting and analyzing data.

SONYC, a National Science Foundation-funded collaboration between New York University (NYU), the City of New York, and The Ohio State University, is in the third year of a five-year research agenda that uses machine listening technology, big data analysis, and citizen science to monitor, analyze, and mitigate urban noise pollution more effectively.

Hosted on the Zooniverse citizen science web portal (zooniverse.org), the initiative enlists volunteers to identify and label individual sound sources, such as a jackhammer or an ice cream truck, in anonymized 10-second urban sound recordings. The recordings are transmitted from acoustic sensors placed in high-noise environments across the city.

Trained on the labels that citizen scientists provide, machine listening models learn to recognize these sounds on their own. The models then help researchers categorize the sound data the sensors have collected over the past two years. This categorization makes big data analysis possible, which will, in part, give city enforcement officials a more accurate picture of noise, and its causes, over time.

“It’s impossible for us to sift through this data on our own, but we’ve learned through extensive research how to seamlessly integrate citizen scientists into our systems and advance our understanding of how humans can effectively train machine learning models,” said Juan Pablo Bello, lead investigator and director of the Music and Audio Research Lab (MARL) at NYU Steinhardt.

Ultimately, the SONYC team aims to empower city agencies to implement targeted, data-driven noise mitigation interventions.