Research Areas

Machine Listening

Machine listening broadly includes data-driven methods for analysis and generation of audio. Example projects in this area include: classification of sound events, transcription of music, analysis of speech, generation of acoustic scenes, and so on. Our research in this area is often informed by and integrated with other data modalities, such as images, video, or text.

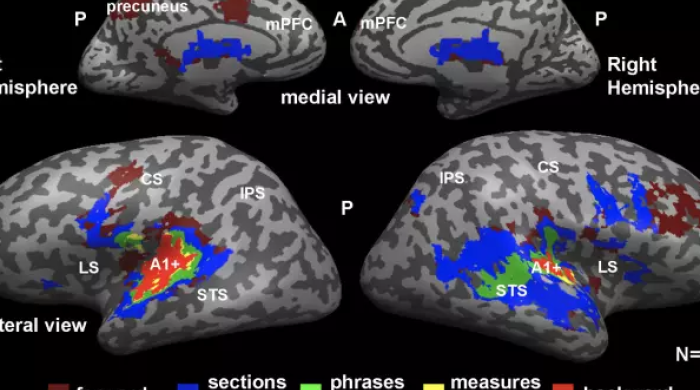

Music Psychology and Cognitive Neuroscience

We use cognitive neuroscience and psychology methods to study how music influences human thoughts, feelings, and behaviors. This includes studying how we perceive, respond to, and interact with music, and how music affects perception, mood, cognition, emotion, and social interactions. We are also interested in understanding how different brain regions and networks are involved in musical activities, such as listening, performing, and creating music.

Immersive Audio

The field of immersive audio encompasses the capture, analysis, synthesis, and reproduction of immersive auditory environments, as well as the study of acoustic and psychoacoustic factors in spatial audio. This includes the capture and representation of spatial and 3D sound using binaural, transaural, multi-channel, and soundfield techniques; audio display devices; virtual auditory environment simulation; games; and the characterization and classification of spaces.

Publications & Resources

MARL's research extends across fields of science and technology under our unifying study of music and sound. Researchers at MARL have published work in journals related to computing, music information retrieval, artificial intelligence, immersive experience, algorithmic composition, human cognition and perception, and neuroimaging of the human brain.

Past Projects

-

This project, which is developing a Reconfigurable Environmental Intelligence Platform (REIP), aims to alleviate many complex aspects of remote sensing, including sensor node design, software stack implementation, privacy issues, bandwidth, and centralized compute limitations, bringing start-up times down from years to weeks. REIP will be a plug-and-play remote sensing infrastructure with advanced edge processing capabilities for in situ insight generation. Sensor networks have dynamically expanded our ability to monitor and study our world. Specialized sensor networks have already been deployed for many applications, including monitoring pedestrian traffic and outdoor noise, and the need for sensing networks keeps increasing as their use cases expand and become more complex. Sensors no longer simply record data; they process and transform it into something useful before sending it to central servers.

-

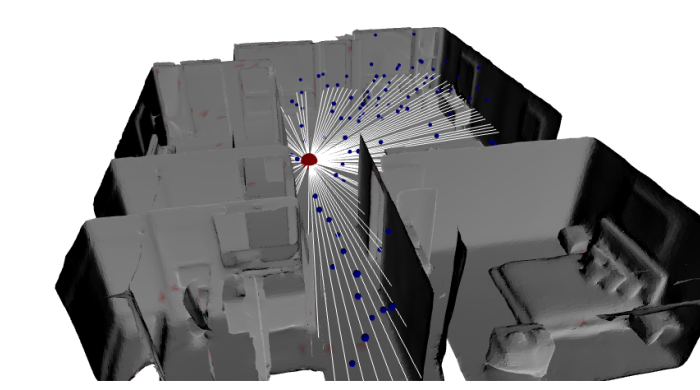

Sound is rich with information about the surrounding environment. If you stand on a city sidewalk with your eyes closed and listen, you will hear the sounds of events happening around you: birds chirping, squirrels scurrying, people talking, doors opening, an ambulance speeding, a truck idling. You will also likely be able to perceive the location of each sound source, where it’s going, and how fast it’s moving. This project will build innovative technologies to allow computers to extract this rich information out of sound. By not only identifying which sound sources are present but also estimating the spatial location and movement of each sound source, sound sensing technology will be able to better describe our environments with microphone-enabled everyday devices, e.g., smartphones, headphones, smart speakers, hearing aids, home cameras, and mixed-reality headsets. For hearing-impaired individuals, the developed technologies have the potential to alert them to dangerous situations in urban or domestic environments. For city agencies, acoustic sensors will be able to more accurately quantify traffic, construction, and other activities in urban environments. For ecologists, this technology can help them more accurately monitor and study wildlife. In addition, this information complements what computer vision can sense, as sound can include information about events that are not easily visible, such as sources that are small (e.g., insects), far away (e.g., a distant jackhammer), or simply hidden behind another object (e.g., an incoming ambulance around a building’s corner).

-

The broader impact/commercial potential of this project is the use of embedded machine listening as a low-cost, turnkey solution for early detection of machinery malfunction and improved predictive maintenance. In manufacturing, sound-based condition monitoring coupled with data-driven maintenance can help significantly reduce unscheduled work stoppages, faulty products, and waste of raw materials. Building management systems can be augmented by integrating real-time condition updates for critical machinery such as HVAC units, elevators, boilers, and pumps, minimizing disruption for managers and users of those services. This technology is flexible, accurate, and data-driven, potentially providing a low barrier to adoption for prospective customers and adaptability to various markets. Beyond predictive maintenance, applications include noise level monitoring for ensuring compliance in workplaces and airports, home and building security, early alert for traffic accidents, bio-acoustic monitoring of animal species, and outdoor noise monitoring at scale for improved enforcement in smart cities.

This project further develops research at the intersection of artificial intelligence and the internet of things. The technology consists of a calibrated and highly accurate acoustic sensor with embedded sound recognition AI based on deep learning. Sound conveys critical information about the environment that often cannot be measured by other means. In manufacturing, early-stage machinery malfunction can be indicated by abnormal acoustic emissions. In smart homes and buildings, sound can be monitored for signs of alarm, distress, or compliance. Sound sensing is omnidirectional and robust to occlusion and contextual variables such as lighting conditions at different times of the day. Many existing solutions cannot identify different types of sounds or complex acoustic patterns, making them unsuited for these applications. This solution is both low cost and capable of identifying events and sources at the network edge, thus eliminating the need for sensitive audio information to be transmitted.

-

Citygram is a large-scale project that began in 2011. Citygram aims to deliver a real-time visualization/mapping system focusing on non-ocular energies through scale-accurate, non-intrusive, and data-driven interactive digital maps. The first iteration, Citygram One, focuses on exploring spatio-acoustic energies to reveal meaningful information including spatial loudness, traffic patterns, noise pollution, and emotion/mood through audio signal processing and machine learning techniques.

Citygram aims to create a model for visualizing and applying computational techniques to produce metrics and further our knowledge of cities such as NYC and LA. The project will enable a richer representation and understanding of cities defined by humans, visible/invisible energies, buildings, machines, and other biotic/abiotic entities. Our freely Internet-accessible system will yield high impact results that are general enough for a wide range of applications for residents, businesses, and visitors to cities like NYC and LA.

Citygram is a long-term, iterative, and infrastructural project – subsequent iterations will focus on additional energy formats including humidity, light, and color.

-

The aim of this project is to automatically generate musical accompaniments for a given melodic sequence. Finite state machines are used frequently in speech recognition systems to model sequential symbolic data and mappings between symbol sequences.

In an automatic speech recognition system, a finite state transducer, which maps an input sequence to an output sequence, is used to map sequences of phonemes to words. An n-gram, represented as a finite state automaton, is used as a language model.

In our approach, we put the problem of generating harmonic accompaniment in the speech recognition framework, treating melody notes as phonemes and chords as words, using finite state machines for each model. For an input melody sequence and alphabet of possible chords, we estimate the most likely chord sequence.
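The decoding step described above can be illustrated with a small Viterbi search, which finds the same kind of best path that a composition of weighted finite state machines would yield. This is only a sketch under stated assumptions, not the project's code: the three-chord alphabet, the bigram "language model" in `TRANSITION`, the note-given-chord model in `emission`, and the function name `harmonize` are all hypothetical, every probability is made up for illustration, and one chord is assigned per melody note.

```python
import math

# Hypothetical toy models: every number below is made up for illustration.
CHORDS = ["C", "F", "G"]

# Chord "language model": bigram probabilities P(next chord | previous chord).
TRANSITION = {
    "C": {"C": 0.5, "F": 0.25, "G": 0.25},
    "F": {"C": 0.4, "F": 0.3, "G": 0.3},
    "G": {"C": 0.6, "F": 0.1, "G": 0.3},
}

# Chord tones as pitch classes (0 = C, 1 = C#, ..., 11 = B).
CHORD_TONES = {"C": {0, 4, 7}, "F": {5, 9, 0}, "G": {7, 11, 2}}


def emission(chord, pitch_class):
    """P(melody pitch class | chord): chord tones are favoured."""
    if pitch_class in CHORD_TONES[chord]:
        return 0.25      # the 3 chord tones share 0.75
    return 0.25 / 9      # the 9 remaining pitch classes share 0.25


def harmonize(melody):
    """Return the most likely chord sequence, one chord per melody note."""
    if not melody:
        return []
    # best[c] = (log-probability of the best path ending in chord c, that path)
    prior = math.log(1.0 / len(CHORDS))
    best = {c: (prior + math.log(emission(c, melody[0])), [c]) for c in CHORDS}
    for pitch_class in melody[1:]:
        new_best = {}
        for c in CHORDS:
            # Pick the best predecessor chord for c.
            score, path = max(
                (best[p][0] + math.log(TRANSITION[p][c]), best[p][1])
                for p in CHORDS
            )
            new_best[c] = (score + math.log(emission(c, pitch_class)), path + [c])
        best = new_best
    return max(best.values())[1]


if __name__ == "__main__":
    # Melody given as pitch classes: C, F, G, C.
    print(harmonize([0, 5, 7, 0]))
```

Working in log-probabilities keeps long melodies from underflowing, which is the same reason weighted finite state toolkits usually operate on negative log weights.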

Code

The source code for this project can be found on GitHub.

Applications

Harmonic Accompaniment Generation App

The accompaniment generation approach was used as the core of an application that generates harmonic accompaniment to melodies created using a simple step sequencer.

The Harmonically Ecosystemic Machine; Sonic Space No. 7

The Harmonically Ecosystemic Machine; Sonic Space No. 7 is an interactive music performance system building on the work of its three contributing artist/researchers, Michael Musick, Jonathan Forsyth, and Rachel Bittner. It combines Michael Musick's work with sonic ecosystems and the automatic accompaniment approach described above.

This piece invites participants to contribute musically by playing the instruments placed throughout the active space. In doing so, they join the system as collaborators and interrelated musical agents. In essence this creates a chamber work, in both senses of the word: the piece becomes an improvisation between the system and participants, and a work that activates the entire physical chamber it is installed within.

An excerpt can be heard here.

-

BirdVox, a collaboration between MARL and the Cornell Lab of Ornithology, aims to investigate machine listening techniques for the automatic detection and classification of free-flying bird species from their vocalizations. The ultimate goal is to deploy a network of acoustic sensing devices for real-time monitoring of seasonal bird migratory patterns, particularly the determination of the precise timing of passage for each species.

Find out more

Further information including news updates, publications, and project collaborators is available at the BirdVox website.

-

Cover song identification is a relevant task in the field of Music Informatics Research. We have been working on methods to identify covers in large datasets, specifically the Million Song Dataset. Our approach, which makes use of machine learning algorithms such as sparse coding and linear discriminant analysis, outperforms previous results for this task.

Source Code

The source code for this project can be found on GitHub.

Acknowledgements

This project was supported by the “Caja Madrid” Foundation and the NSF.

-

The general music learning public places a high demand on chord-based representations of popular music, as evidenced by large online communities surrounding websites like e-chords or Ultimate Guitar. Given the prerequisite skill necessary to manually identify chords from recorded audio, there is considerable motivation to develop automated systems capable of reliably performing this task. The goal of automatic chord estimation research is to develop systems that produce time-aligned sequences of chords from a given music signal.
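To make the target output concrete, the sketch below shows one way a time-aligned chord sequence could be represented: frame-level chord labels are merged into `(start, end, chord)` segments. It assumes some upstream classifier has already produced one label per analysis frame; the function name `frames_to_segments`, the hop size, and the use of `"N"` for "no chord" are illustrative assumptions rather than details of this project.

```python
def frames_to_segments(labels, hop_seconds):
    """Merge consecutive identical frame labels into (start, end, chord) segments.

    labels: one chord label per analysis frame (hypothetical classifier output).
    hop_seconds: time between the starts of successive frames.
    """
    segments = []
    for i, label in enumerate(labels):
        start = i * hop_seconds
        end = (i + 1) * hop_seconds
        if segments and segments[-1][2] == label:
            # Same chord as the previous frame: extend the current segment.
            segments[-1] = (segments[-1][0], end, label)
        else:
            # Chord change: open a new segment.
            segments.append((start, end, label))
    return segments


if __name__ == "__main__":
    frame_labels = ["C", "C", "G", "G", "G", "N"]  # "N" = no chord (assumed convention)
    for start, end, chord in frames_to_segments(frame_labels, hop_seconds=0.5):
        print(f"{start:.1f}\t{end:.1f}\t{chord}")
```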

Source Code

The source code for this project can be found on GitHub.

-

Gordon is a database management system for working with large audio collections. For the most part it is intended for research. Gordon includes a database backend, web server, and Python API. Gordon will automatically import music collections into the database and try to resolve tracks, albums, and artists to the MusicBrainz service.

Gordon is named after the character Rob Gordon from the Nick Hornby book High Fidelity. This was also a movie with John Cusack playing Gordon (and Jack Black playing Barry, another great character).

-

The NYU Holodeck is an NSF MRI Track 2 Development project that will create an immersive, collaborative, virtual/physical research environment providing unparalleled tools for research collaborations, intellectual exploration, and creative output.

To prototype the transition from projection to functional AR, MR and VR simulations, our interdisciplinary team will develop an instrument and educational environment that facilitates enticing research and creates an experiential supercomputing infrastructure that empowers students, faculty, and researchers to develop new knowledge and disseminate powerful new integrated, multimodal tools and techniques.

Audio

The audio capabilities of the instrument will use loudspeakers and HRTF-processed headphones, capable of reproducing high quality spatial audio. High quality headphones (e.g. Sennheiser HD650) will be equipped with Head-Related Transfer Function (HRTF) processing technology through the Max/MSP software. HRTFs will be either personally measured using ScanIR, the impulse response measurement software developed at NYU, or selected by the user.
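In the time domain, HRTF processing amounts to convolving a mono source with a pair of head-related impulse responses (HRIRs), one per ear. The sketch below illustrates that idea in plain Python; it is not the Max/MSP implementation described above, the function names are hypothetical, and the two-sample HRIRs are made-up toy values rather than ScanIR measurements.

```python
def convolve(signal, impulse_response):
    """Direct-form convolution of two sample sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def render_binaural(mono, hrir_left, hrir_right):
    """Return (left, right) headphone signals for one source direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


if __name__ == "__main__":
    # Toy HRIRs for a source on the listener's left (assumed values):
    # the right ear receives the sound one sample later and attenuated.
    hrir_left = [1.0, 0.0]
    hrir_right = [0.0, 0.5]
    left, right = render_binaural([1.0, 2.0], hrir_left, hrir_right)
    print(left, right)
```

Real HRIRs are hundreds of samples long, so practical renderers typically use FFT-based convolution and switch or interpolate filters as the listener's head moves.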

The instrument will be the first integrated auditory system, comprising the most advanced audio reproduction systems, that is tightly coupled with multimodal capacities across all equipment categories.

-

Force Feedback for Mobile Devices

(Fortissimo and more)

We developed an effective and simple technique to add force feedback to any mobile computing device by using the accelerometer data and attaching a small foam padding to the device. We call this technique Fortissimo.
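The text above does not spell out how the accelerometer data is used, so the following is only a guess at the general idea: treat the peak deviation of acceleration magnitude from gravity around a touch event as a proxy for how hard the device was struck, and scale it to a MIDI-style velocity. The function name `tap_intensity`, the `max_deviation` scaling constant, and the 0 to 127 output range are all assumptions for illustration.

```python
import math

GRAVITY = 9.81  # m/s^2, magnitude reported by a device at rest


def tap_intensity(samples, max_deviation=20.0):
    """Map accelerometer readings around a touch to a velocity in 0..127.

    samples: list of (x, y, z) readings in m/s^2 captured around the touch.
    max_deviation: deviation from gravity treated as full force (assumed value).
    """
    if not samples:
        return 0
    # Peak deviation of the acceleration magnitude from the resting value.
    peak = max(
        abs(math.sqrt(x * x + y * y + z * z) - GRAVITY) for x, y, z in samples
    )
    normalized = min(peak / max_deviation, 1.0)
    return round(normalized * 127)


if __name__ == "__main__":
    soft_tap = [(0.0, 0.0, 9.81), (0.1, 0.0, 11.0), (0.0, 0.0, 9.9)]
    hard_tap = [(0.0, 0.0, 9.81), (0.5, 0.2, 24.0), (0.0, 0.0, 10.5)]
    print(tap_intensity(soft_tap), tap_intensity(hard_tap))
```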

We also explored other ways to enhance the expressivity of the devices by adding inexpensive hardware to them. These additions give the device a more realistic feel, especially during a musical performance.

We developed a technology that uses the front camera of the devices to recognize different hand gestures. These gestures (and the distance to the camera) are attached to various synthesis parameters. We called this prototype AirSynth.

-

The Sonic Spaces Project comprises the composition of, and research into, sonic ecosystems. These sonic ecosystems are a sub-type of interactive music systems that model relationship principles from natural soundscapes and whose interface is the entire sonic space of a room. Interactive music systems rely on related disciplines such as music informatics, computer science, soundscape art, soundscape ecology, experience design, and interactive art to create music. Interactive music systems can be used as collaborators with human performers, as an extension of an instrument, to assist with the composition of notated music, or to make music with other machines. Sonic ecosystems are interactive music systems composed of one or more software-agents, which listen to each other as well as to any human-agents in the installation space. The software-agents of these systems listen for specific acoustic properties in the sonic energy (also referred to as sound, or music). If the sonic energy in the room matches these specifications, then the software-agent consumes that music, processes it, and returns the transformed music to the space. Other software-agents can then transform this music or sonic energy into something new that is then also played back into the space.
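The listen, consume, process, and return cycle described above can be sketched as a toy simulation. This is a loose illustration, not how any Sonic Spaces system is built: the "acoustic property" each agent listens for is reduced to an RMS level range, the room holds a single frame of samples at a time, and the names `SoftwareAgent`, `run_ecosystem`, `loud_eater`, and `quiet_eater` are all hypothetical.

```python
import math


def rms(frame):
    """Root-mean-square level of a frame of samples (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


class SoftwareAgent:
    """Listens for sound whose RMS level falls in [low, high) and transforms it."""

    def __init__(self, name, low, high, transform):
        self.name = name
        self.low = low
        self.high = high
        self.transform = transform

    def listen(self, frame):
        """Return the transformed frame if it matches, otherwise None."""
        if self.low <= rms(frame) < self.high:
            return self.transform(frame)
        return None


def run_ecosystem(agents, frame, max_rounds=10):
    """Let agents take turns consuming and re-emitting the sound in the room."""
    history = []
    for _ in range(max_rounds):
        for agent in agents:
            response = agent.listen(frame)
            if response is not None:
                history.append(agent.name)
                frame = response  # transformed sound is played back into the space
                break
        else:
            break  # no agent matched the current sound, so nothing more happens
    return frame, history


# Two hypothetical agents: one reverses and softens loud sound,
# the other further attenuates moderately quiet sound.
loud_eater = SoftwareAgent(
    "loud_eater", 0.5, float("inf"), lambda f: [0.4 * s for s in reversed(f)]
)
quiet_eater = SoftwareAgent("quiet_eater", 0.1, 0.5, lambda f: [0.1 * s for s in f])

if __name__ == "__main__":
    final_frame, who_played = run_ecosystem(
        [loud_eater, quiet_eater], [1.0, -1.0, 1.0, -1.0]
    )
    print(who_played, final_frame)
```

In this toy run one agent's output becomes another agent's input, which mirrors the chain of transformations the paragraph describes; a real installation would mix agent output with the live room sound rather than replace it.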

The research and composition of these sonic ecosystems has been labeled as The Sonic Spaces Project. The project has been developing compositional approaches for this type of interactive music system since 2011, which has resulted in the completion of a number of distinct systems, each consisting of multiple iterations. This work has been inspired and informed by a number of artists and researchers, as well as by soundwalk practices.

-

The objectives of SONYC are to create technological solutions for:

- The systematic, constant monitoring of noise pollution at city scale.

- The accurate description of acoustic environments in terms of their constituent sources.

- Broadening citizen participation in noise reporting and mitigation.

- Enabling city agencies to take effective, information-driven action for noise mitigation.

Find out more:

Further information including news updates, publications, and project collaborators is available at the SONYC website.

Acknowledgments

This work is supported by a seed grant from New York University’s Center for Urban Science and Progress (CUSP).