Classroom Orchestration Assistant Based on Automated Ambient Sound Classification

The objective of this collaborative project between LEARN and the Music and Audio Research Laboratory (MARL) is to build and evaluate, both technically and pedagogically, a prototype system that automatically and unobtrusively (i) detects the different pedagogical activities that occur in the classroom and (ii) records their type and duration. The project aims to provide instructors with real-time feedback for reflection, delivered through alerts, analytic summaries, and visualizations, to support their classroom orchestration strategies.

The main expected impact of this project is to improve teaching practices by supporting instructors with evidence-based reflection and decision-making, while requiring little additional effort from them to collect and analyze the data. Additionally, this project will serve as an example of how machine learning and AI tools can be seamlessly integrated into pedagogical practice to augment the capabilities of both instructors and students.

Presentation Feedback Tool

The Presentation Feedback Tool is a system to facilitate the acquisition of oral presentation skills. This low-cost system pairs simple sensors with advanced multimodal signal analysis to automatically generate feedback reports that help individuals identify problems with their body language, speech, or slides. Students and instructors can use the system autonomously, privately, and repeatedly to practice and improve basic presentation skills.

Instrumented Learning Spaces

The Working Group on Instrumented Learning Spaces is a collaboration between NYU's Tandon School of Engineering and LEARN, funded by a subaward from the Center for Innovative Research on CyberLearning (CIRCL). This project involves coordinating a virtual working group and an in-person gathering in the Tandon Makerspace to explore the affordances, constraints, and data infrastructure requirements for high-fidelity capture of performance, learning, and collaboration in active spaces. Products will include a Rapid Community Report and a shared, multimodal data archive that researchers can explore to probe the possibilities for analyzing such novel data streams.

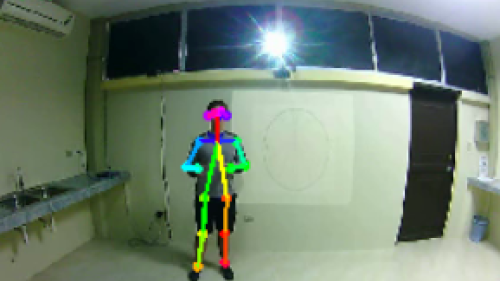

Integrating Physical Computing and Data Science in Movement-Based Learning

This project, supported by an award from the National Science Foundation, is a collaboration between LEARN, STEM from Dance, and the University of Colorado Boulder to examine how to integrate machine learning, data science, and physical computing in the context of movement-based learning in dance and cheerleading. Students will learn to create computing systems with programmable electronics worn on the body, use those systems to create statistical models of movement and gesture, and then apply the models in a digital experiential learning environment.